We have looked at the “Characterizing and Optimizing Remote Persistent Memory with RDMA and NVM” ATC’21 paper. This paper investigates a combination of two promising technologies: Remote Direct Memory Access (RDMA) and Non-Volatile Memory (NVM). We have discussed both of these in our reading group before.

RDMA allows efficient access to the remote server’s memory, often entirely bypassing the remote server’s CPU. NVM is a new non-volatile storage technology. One of the key features of NVM is its ability to be used like DRAM, but with the added benefit of surviving power outages and reboots. Typically, NVM is also faster than traditional storage and gets closer to the latency of DRAM. However, NVM still significantly lags behind DRAM in throughput, and especially in write throughput. The cool thing about NVM is its “Memory Mode,” which essentially makes Optane NVM appear like a ton of RAM in the machine. And here is the nice part — if it acts like RAM, then we can use RDMA to access it remotely. Of course, this comes with a catch — after all, NVM is not actual DRAM, so what works well to optimize RDMA under normal circumstances may not work as well here. This paper presents a handful of RDMA+NVM optimizations from the literature and augments them with a few own observations.

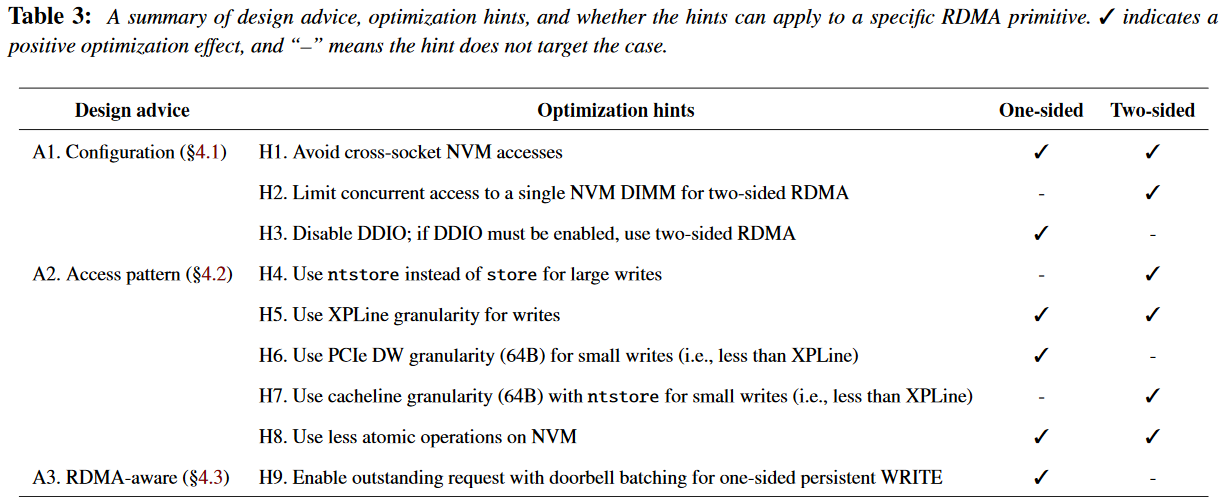

Below I put the table with optimizations summarized in the paper. The table contains the literature optimizations (H1-H5), along with the author’s observations (Parts of H3, H6-H8). One additional aspect of the table is the applicability of optimization to one-sided or two-sided RDMA. One-sided RDMA does not involve a remote CPU. However, two-sided RDAM uses a remote server’s CPU.

H1: Accessing NVM attached to another socket is slow, so it is better to avoid it. We have discussed NUMA a bit in a previous paper to give a hint at what may be the problem here. The paper raises some concerns on the practicality of avoiding cross-socket access, but it also provides some guidance for implementing it.

H2: For two-sided RDMA that involves the host’s CPU, it is better to spread the writes to multiple NVM DIMMs.

H3: Data Direct I/O technology (DDIO) transfers data from the network card to the CPU’s cache. In one-sided RDMA, that will cause the sequential writes to NVM to become Random, impacting performance, especially for large payloads, so it is better to turn DDIO off. However, there are some implications for touching this option, and the paper discusses them in greater detail.

H4: ntstore command bypasses the CPU caches and stores data directly to the NVM. So this can make things a bit faster in two-sided RDMA that already touches the CPU.

H5: This one deals with some hardware specs and suggests using writes that fill an entire 256 bytes XPLine (the data storage granularity). I am not going to explain this any further. However, the paper mentions that this optimization is not always great since padding the entire XPLine (and sending it over the network) incurs a lot of overhead.

H6: For one-sided RDMA, it is more efficient to write at the granularity of PCIe data word (64 bytes) for payloads smaller than XPLine. This is the granularity at which NIC operates over the PCIe bus.

H7: Similar to H6, for two-sided RDMA, write at the granularity of a cache line (64 bytes on x86 architecture).

H8: atomic operations, such as read-modify-write, are expensive, so for best performance, it is better to avoid them.

H9: Doorbell batching helps when checking for persistence. In one-sided RDMA, there is no easy way to check if data has persisted, aside from performing a read. This procedure incurs two round-trips and obviously hurts performance. Doorbell batching allows to send a write and a read at once but delays the read on the remote until the write completes, avoiding two separate round-trips.

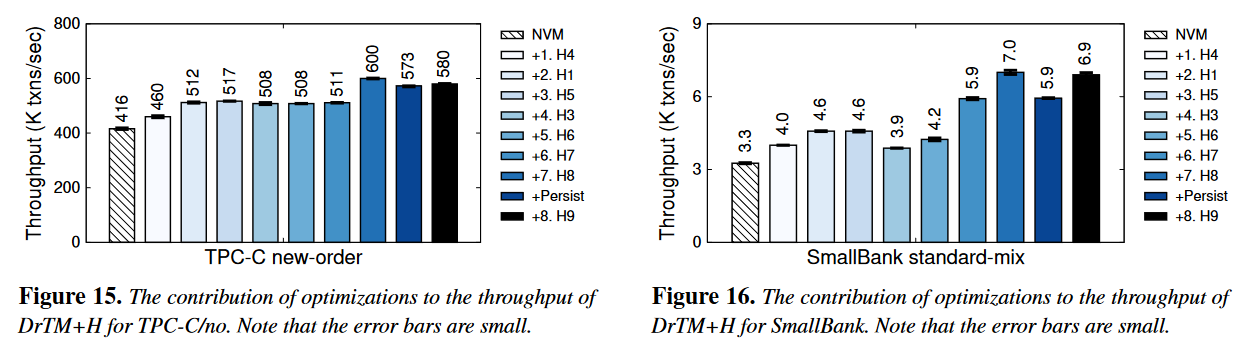

The figure below illustrates how the optimization changes the performance when added one at a time. It is worth noting that some optimizations can actually slow the system down, so it is important to understand the specific circumstances of the workload to make the proper decision on using some of the suggestions from this paper.

The paper has a lot more details and discussions of individual optimizations. It is also full of individual evaluations and experiments for different optimizations.

Brian Luger did an awesome presentation of the paper, which is available on YouTube:

Discussion

1) Only writes. The paper focuses on a bunch of write optimizations, and no read ones. The authors explain this by saying that NVMs read throughput is very high and exceeds the NICs bandwidth, so the network will be a bottleneck for reading. On the write side of things, however, the bottleneck is the NVM itself, and it is not held up by other slower components, making write optimizations very desirable. At the same time, if the network catches up with NVM bandwidth, then we may need to play the same optimizing game for reads.

One thing to mention here is that read-to-write ratios will play a huge role in perceived improvement from optimizations. The paper has not explored this angle, but if the workload is read-dominated (like many database workloads), then all the write optimizations will be left unnoticed for the overall performance. For all the fairness, the paper does use realistic workloads with writes and reads for their evaluation.

2) Vendor-Specific? Currently, there is pretty much one vendor/product out there for NVM — Intel Optane. So a natural question is how many of these optimizations will transfer to other NVM products and vendors.

3) Vendor Benefit. Our final discussion point was about the exposure of RDMA+NVM technology in the cloud. It is still very hard/expensive to get VMs with RDMA support. Having the two technologies available in the public cloud is even less probably now. This means that RDMA+NVM will remain the technology for the “big boys” that run their own data centers. Vendors like Microsoft, AWS, Google, etc. can benefit from this tech in their internal products while making it virtually inaccessible to the general open-source competition.