In our 54th reading group meeting, we were looking for an answer to an important question in the distributed systems community: “What about Raft?” We looked at the “Paxos vs Raft: Have we reached consensus on distributed consensus?” paper to try to find the answer. As always, we had an excellent presentation, this time by A. Jesse Jiryu Davis:

The paper compares Multi-Paxos and Raft protocols, but it does so through the lens of Raft and uses Raft terminology to describe Paxos. This can be very handy for people who first learned consensus through Raft. The paper mentions that the two protocols are very similar, especially in the “happy-case” operations. The important differences come in the leader-election phases of the protocols. In Raft, a new leader must have a log that is at least as up-to-date as the logs of the majority that votes for it, while Multi-Paxos can pick any node as a leader and recover missing log entries. The authors argue that this Raft behavior is good for efficiency: the new leader can start quickly since it does not need to learn any missing entries.
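To make the election difference a bit more concrete, here is a minimal sketch of the log comparison a Raft voter performs before granting its vote, as I understand it from the Raft paper. The names `LogSummary` and `candidate_is_up_to_date` are made up for illustration and do not come from any particular implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LogSummary:
    """Hypothetical summary of a node's log: only the last entry matters here."""
    last_term: int   # term of the last log entry
    last_index: int  # index of the last log entry


def candidate_is_up_to_date(candidate: LogSummary, voter: LogSummary) -> bool:
    """Return True if the voter's log check allows voting for the candidate.

    The log whose last entry has the higher term wins; if the terms are
    equal, the longer log wins (ties are fine).
    """
    if candidate.last_term != voter.last_term:
        return candidate.last_term > voter.last_term
    return candidate.last_index >= voter.last_index
```

Note that under this rule a longer log can still lose to a shorter one whose last entry carries a higher term, so "most up-to-date" is not simply "longest."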

When it comes to understandability, the paper says that Raft is “slightly more understandable than Paxos.” I suppose this comparison comes after expressing/explaining Paxos in Raft terminology, and not based on the original explanations.

Personally, Paxos is more understandable to me, since I absolutely hate the quirk of Raft where a majority-accepted but not committed value may get “chopped off” upon some intricate leader churn (see Figure 8 in the original Raft paper). This ties to the sequential commit requirement in the normal case, and different commit rules that apply upon leader churn, which all tie to the leader election procedure itself. How is it more understandable? In contrast, Paxos never loses a majority-accepted value and has one commit rule, although it allows committing out-of-order (the execution of committed operations still must follow the log order).
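For readers who have not stared at Figure 8, the following is a rough sketch of the leader-side commit rule in Raft as I read it: an entry is committed by counting replicas only if it belongs to the leader's current term, and older entries get committed indirectly. The function name and the list-based log representation are my own assumptions for illustration.

```python
from typing import List


def advance_commit_index(
    log_terms: List[int],    # log_terms[i - 1] is the term of the entry at index i
    match_index: List[int],  # highest replicated index per node, leader included
    current_term: int,
    commit_index: int,
) -> int:
    """Return the new commit index for a leader (a sketch, not a full Raft)."""
    majority = len(match_index) // 2 + 1
    # Scan from the end of the log down to the first uncommitted entry.
    for n in range(len(log_terms), commit_index, -1):
        replicated = sum(1 for m in match_index if m >= n)
        # A majority alone is not enough: the entry must be from the current term.
        if replicated >= majority and log_terms[n - 1] == current_term:
            return n
    return commit_index


# A term-2 entry at index 2 sits on 3 of 5 nodes, but the leader is in term 4.
stuck = advance_commit_index([1, 2], [2, 2, 2, 1, 1], current_term=4, commit_index=1)

# Once a term-4 entry at index 3 reaches a majority, index 2 commits along with it.
moved = advance_commit_index([1, 2, 4], [3, 3, 3, 1, 1], current_term=4, commit_index=1)
```

In the first call the commit index stays at 1 even though index 2 is on a majority, which is exactly the window in which that entry can still be overwritten.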

I won’t go further into summarizing the paper. It is a good educational read, and I do not want to spoil it.

Discussion.

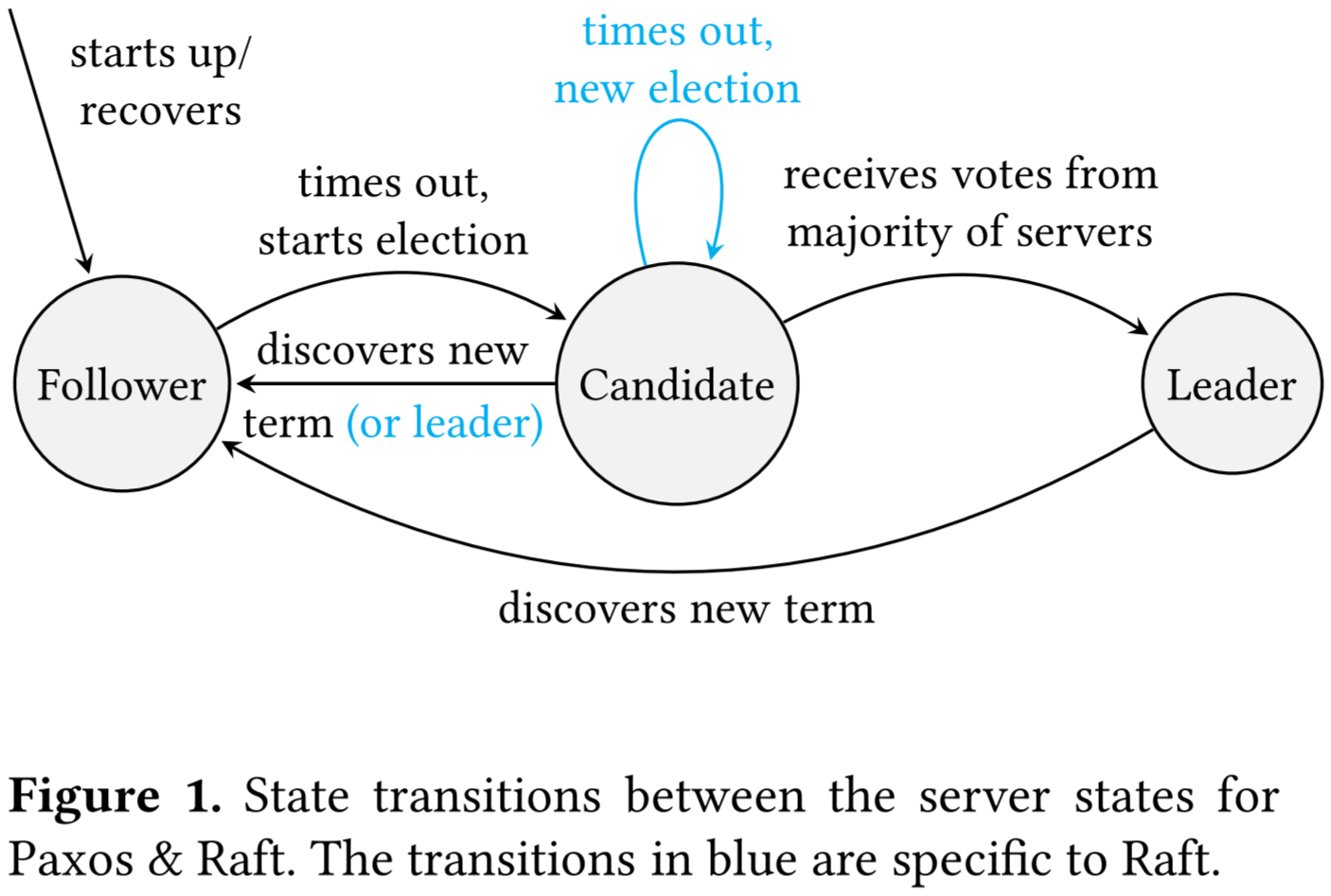

1) Original Paxos Papers as a Source of Confusion? Needless to say, Lamport’s original Paxos paper does not have the clearest description of the algorithm. This somewhat extends to Lamport’s Paxos Made Simple paper, and Paxos Made Moderately Complex by Robbert van Renesse. However, the relative difficulty of understanding Paxos from these later papers may not lie in the algorithm’s description itself, but in the language used. The Raft paper is written with an engineering audience in mind, and operates with primitives, like RPCs, that can be used in programming languages right away. Raft is described in operational concepts, such as server states with clear boundaries and transitions between states: first, do leader election, then do log replication, and finally, go back to leader election if needed. These may have a great appeal to students and professionals. We teach computer science from the code first (it is a separate discussion whether this is the right way to approach distributed systems though), and describing a protocol closer to code has a certain appeal.

Multi-Paxos is described in more abstract terms. Multi-Paxos is not as “operational.” For example, the leader election gets blurred a bit with replication as the leader may need to fill some slots before becoming fully active. Transitions back to leader election are also a bit murkier compared to Raft.
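As an illustration of how leader election blurs into replication, here is a small sketch of what a new Multi-Paxos leader might do with the promises it collects from a quorum: for each slot, adopt the value accepted under the highest ballot, and plug any holes with a no-op. The data layout, the `recover_slots` name, and the `NOOP` marker are assumptions for this example; real implementations differ in many details.

```python
from typing import Dict, List, Tuple

# Hypothetical shape of one acceptor's promise: slot -> (accepted ballot, value)
Accepted = Dict[int, Tuple[int, str]]

NOOP = "NOOP"  # placeholder value used to fill gaps in the log


def recover_slots(promises: List[Accepted]) -> Dict[int, str]:
    """Merge a quorum of promises into the values the new leader must re-propose."""
    best: Dict[int, Tuple[int, str]] = {}
    for accepted in promises:
        for slot, (ballot, value) in accepted.items():
            # Keep the value accepted under the highest ballot for each slot.
            if slot not in best or ballot > best[slot][0]:
                best[slot] = (ballot, value)
    if not best:
        return {}
    # Slots nobody in the quorum reported become no-ops, so the log has no holes.
    return {
        slot: best[slot][1] if slot in best else NOOP
        for slot in range(min(best), max(best) + 1)
    }


recovered = recover_slots([
    {1: (1, "a"), 3: (2, "c")},
    {1: (1, "a"), 3: (1, "x")},
    {},
])
```

The leader would then re-propose each recovered value under its own ballot before serving new requests, which is the extra work Raft avoids by restricting who can win the election.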

I think it is great to have this abstract Paxos algorithm that we can shape and implement in a variety of ways. This leads us to uncover new ways it can be refined and/or implemented; take a look at the Flexible Paxos result.
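For those unfamiliar with Flexible Paxos, its core observation is that only leader-election (phase-1) quorums and replication (phase-2) quorums need to intersect, so neither has to be a majority. For simple size-based quorums this boils down to a one-line check. The sketch below states that check and brute-forces it on a small cluster; the function names are mine and are only for illustration.

```python
from itertools import combinations


def quorums_intersect(n: int, q1: int, q2: int) -> bool:
    """Size-based check: any q1 nodes and any q2 nodes out of n must overlap."""
    return 0 < q1 <= n and 0 < q2 <= n and q1 + q2 > n


def every_pair_intersects(n: int, q1: int, q2: int) -> bool:
    """Brute-force confirmation of the same property for small clusters."""
    nodes = range(n)
    return all(
        set(a) & set(b)
        for a in combinations(nodes, q1)
        for b in combinations(nodes, q2)
    )


# Majority quorums are just one valid choice among several for 5 nodes.
majority_ok = quorums_intersect(5, 3, 3)
# Larger election quorum, smaller replication quorum.
flexible_ok = quorums_intersect(5, 4, 2)
# Too small: two election nodes and three replication nodes can miss each other.
too_small = quorums_intersect(5, 2, 3)
```

The trade-off is that shrinking the replication quorum makes the common case cheaper while making leader election need more nodes.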

2) Reference Implementation(s). To continue the previous point, a great appeal of Raft is its many (reference) implementations. This again appeals greatly to engineers, who can look at the code and run it, trace through breakpoints, and learn it hands-on. Another point that was mentioned in the discussion is a kind of herd effect. Once a good production-grade implementation is available, more people will just take it and use it.

3) Leader election efficiency. We spent a bit of time discussing the leader election efficiency point from the paper. This is an important feature that may come in handy for disaster recovery performance. The question is how realistic it is to have followers that significantly lag behind. These lagging followers may put pressure on the leader, as the leader cannot compact the log while some follower is still behind and needs to consume it, which may cause higher memory and/or storage consumption. But of course, this applies only to severely lagging machines, and the performance hit from catching up after the leader election can be noticeable even with less severe staleness. In the discussion, it was mentioned that having hours of staleness is possible on some systems!

On the other hand, the Cloudflare outage is a good illustration of how Raft’s leader election fails at liveness unless we add a bit more complexity to the protocol (and remove some understandability?). So its good performance may get compromised under some conditions. Here is the paper on this too: “Examining Raft’s behaviour during partial network failures.” And no surprise, it is by Heidi Howard.

4) Is Raft an Implementation of Multi-Paxos? Given a more abstract description of Multi-Paxos and a more direct (and less flexible?) approach taken by Raft, one may think that Raft is, in a sense, an implementation of Multi-Paxos. Both protocols operate on majority quorums and have a stable leader. They have the same communication pattern as the leader talks to followers. Differences appear in the leader election, but are these big enough differences? Can one implement something Raft-like from an abstract Paxos blueprint?

The differences may appear big, but they also may appear as a refinement of Paxos.

- Can I elect a leader without having to copy data from followers? Sure, if you pick the most up-to-date follower to be the leader.

- How do I know which one is the most up-to-date? Well, look at the latest committed log item.

- Wait a sec, in Paxos I can commit out of order, so which one is the latest committed log item? Just don’t commit out of order…

5) Porting Paxos Optimizations to Raft. As mentioned in our presentation, another paper tried to answer the Paxos vs Raft question. That paper, titled “On the Parallels between Paxos and Raft, and how to Port Optimizations”, looked at a number of Paxos optimizations and tried to figure out if they can apply to Raft. The authors there concluded that for the most part, they apply. And they also noted how the two protocols are similar and can be made more similar by tweaking the implementation of Multi-Paxos to be more Raft-like (which goes back to point (4) above).

6) Industry Use of Raft vs Multi-Paxos. The industry loves Raft, for the reasons I already mentioned above: reference code, good existing libraries, and a description that is closer to the code. One notable company that has been using Multi-Paxos is Google. Their Spanner database is based on Multi-Paxos. However, its open-source cousins (CockroachDB and YugabyteDB) rely on Raft. We were wondering how much more difficult it was to implement Multi-Paxos in production compared to Raft in these similar databases.

Easy adoption is a great point for use in the industry, especially when businesses lack the expertise to use formal methods to check their protocols or protocol variants. However, this may be changing now, as formal methods, like TLA+, are picking up more widespread adoption. We see that companies start to modify their Raft protocols in a variety of ways, and remain confident in their safety and liveness properties through model-checking. This adoption of formal methods may bring Multi-Paxos and Raft closer together, as a more abstract way of thinking about these protocols may highlight their similarities. For example, Jesse mentioned how they use TLA+ to build confidence in their variant of Raft.

7) Other Consensus Protocols. Another important topic we discussed relates to other consensus protocols. There are plenty of consensus-based replicated state machines, yet we still pretty much use Paxos or Raft. Solutions like EPaxos and its extension have been around for some time. Is the problem that they are hard to implement? Are they difficult to understand as well? EPaxos is not an easy protocol for sure, but it has a reference implementation. Or maybe these protocols are not as good in practice as the papers promise…

8) Teachability. If the community has a good understanding and description of Paxos, why not use it in classrooms and textbooks? There are not that many good distributed systems textbooks, and it seems like a lot of faculty prefer to teach from papers and their own notes. The same goes for engineering folks who have to pick up on distributed systems state-of-the-art through an extensive literature survey.

We have mentioned a few sources to help with distributed systems, such as the “Designing Data-Intensive Applications” book by Martin Kleppmann or the MIT distributed systems course.