Metastable failures in distributed systems are failures that “feed” and strengthen their own “failed” condition. The main characteristic of a metastable failure is a positive feedback loop that keeps the system in a degraded/failed state. These failures are hard to spot, as they always start with some other distraction — some trigger event that nudges the system over its operating limit or capacity. However, fixing the trigger is not enough, and engineers realize this too late — so late that sometimes it takes days to stabilize the system and return to the pre-failure level of operation.

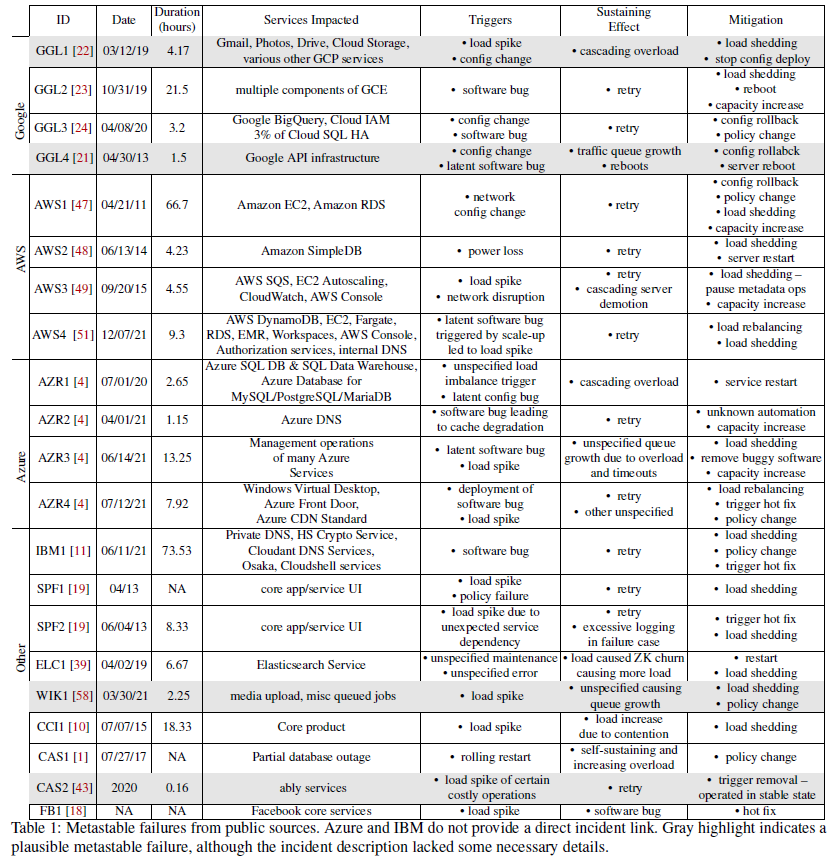

I already blogged about this topic last year in the context of our original metastable failures paper from HotOS’21. Now we have the “improved and extended” version out at OSDI’22, and oh boy, we have a lot of juicy stuff there. That failure that took days to recover? I did not make it up. My students spent quite some time going through hundreds, if not thousands, of publicly available post-mortem reports for different systems to identify a handful of impactful metastable failures. We are sure there are more metastable failures out there lurking in these bug and failure reports. In fact, we had significantly more candidate failures, but many post-mortems simply do not have enough information to be absolutely sure metastability played a role.

Anyway, above is a table from the paper that lists examples of metastable failures in the wild. Some last for an hour, and some take days to resolve. Many examples are due to retries and retry-induced load amplification. See, when a system becomes slow (e.g., due to some server failure or transient network issue), it is likely that latency on some requests will start to grow, and it may reach the point at which the client application gives up on the request and retries. Retries are great for dealing with transient issues, but they create more load for the system by potentially repeating the same request over and over again. When the system is already overloaded due to the ongoing issue, retries do not solve any real problem and only create more unnecessary load. More load means higher queuing times, higher latency, and more retries. This vicious cycle can continue even after the original trigger issues are fixed, and, in many cases, there is no escape from this except load shedding.
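
To make the loop concrete, here is a toy discrete-time sketch of retry amplification. It is not the model or the experiment from the paper: the function name, the parameters, and all the numbers are made up for illustration, and it assumes that every request that does not fit into a tick's capacity times out and comes back as `retry_fanout` retries on the next tick (think of several layers each retrying).

```python
def simulate(arrival_rate, capacity, trigger_capacity, trigger_start,
             trigger_end, ticks, retry_fanout=2.0):
    """Toy model: return the offered load (new requests + retries) per tick."""
    pending_retries = 0.0
    offered_history = []
    for t in range(ticks):
        # The trigger temporarily lowers the serving capacity.
        in_trigger = trigger_start <= t < trigger_end
        cap = trigger_capacity if in_trigger else capacity
        offered = arrival_rate + pending_retries
        # Anything above capacity times out and returns as retries next tick.
        timed_out = max(offered - cap, 0.0)
        pending_retries = timed_out * retry_fanout
        offered_history.append(offered)
    return offered_history


# Same system (80 req/tick of new work, 100 req/tick capacity), two triggers.
small = simulate(80, 100, trigger_capacity=70, trigger_start=5, trigger_end=6, ticks=20)
large = simulate(80, 100, trigger_capacity=50, trigger_start=5, trigger_end=8, ticks=20)
print(small[-1])        # back at the normal load of 80
print(large[-1] > 100)  # still overloaded long after capacity returned
```

In this toy model the loop sustains itself once the offered load goes above 120 requests per tick: the short, shallow trigger stays below that point and the system drains, while the longer, deeper trigger pushes past it and the overload keeps growing even though full capacity is back.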

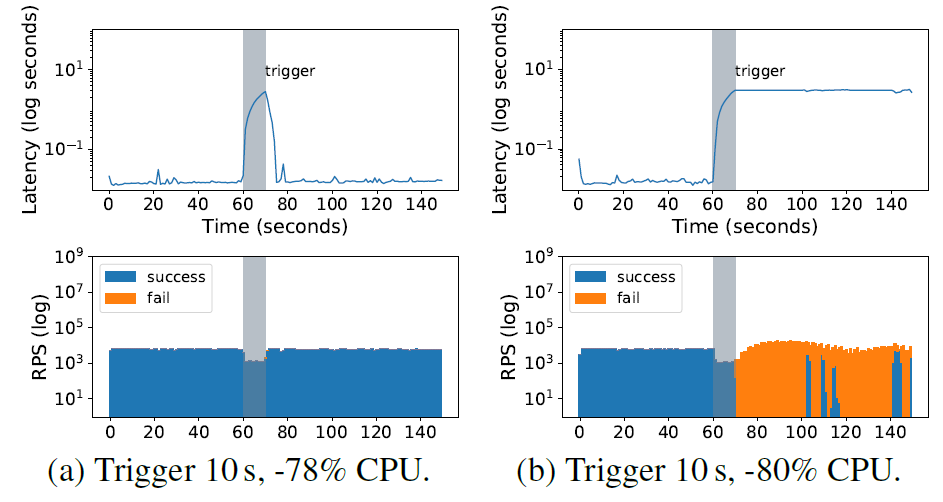

We managed to reproduce a toy example of retry-induced failure using MongoDB. It is a bit concerning that we were able to do so at a small scale, but our experience also showed that not all hope is lost. See, as it turns out, modern systems are good at load shedding: when a request sits in the queue for too long and is too old by the time the server can handle it, such an old request can be dropped without wasting further resources. Our experiment here used a very aggressive retry policy that had to overrun the load-shedding mechanisms of the underlying database. That being said, sometimes the difference between a failure and a recovery can come down to a minuscule difference in the trigger. The figure here shows a failure when a trigger took out 80% of CPU capacity, and a successful recovery when the trigger reduced CPU capacity by 78%.
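
The idea of dropping requests that are already too old can be sketched in a few lines. This is a generic illustration, not how MongoDB or any other database implements it; `DeadlineQueue` and `max_age` are hypothetical names, and timestamps are passed in explicitly to keep the example deterministic.

```python
from collections import deque


class DeadlineQueue:
    """FIFO queue that sheds requests older than max_age at dequeue time."""

    def __init__(self, max_age):
        self.max_age = max_age
        self._items = deque()
        self.dropped = 0

    def put(self, request, now):
        # Remember when the request entered the queue.
        self._items.append((now, request))

    def get(self, now):
        """Return the next request that is still fresh, or None if there is none."""
        while self._items:
            enqueued_at, request = self._items.popleft()
            if now - enqueued_at <= self.max_age:
                return request
            # Too old: the client has likely given up, so do not spend work on it.
            self.dropped += 1
        return None


q = DeadlineQueue(max_age=1.0)
q.put("a", now=0.0)
q.put("b", now=0.9)
print(q.get(now=1.5), q.dropped)  # "a" is stale and dropped, "b" is served
```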

Now, of course, retries are not the only cause of the self-feeding failure loops, but retries are something most engineers are familiar with, so the post-mortem reports tend to have more details on that topic. Potentially, this is one reason for observing so many retry-induced metastable failures in the wild. However, we saw other feedback loops. For example, one of the failures in the list was caused by excessive logging on the error path, which in turn caused the system to slow down and have more requests enter that same error path.

Seeing the examples in the real world and reproducing them is important. Our HotOS paper claimed that reproducibility at a small scale is often challenging. In this new paper, we have a handful of metastable failure scenarios reproduced at a scale of at most a few VMs. However, a good chunk of the paper is about generalizing what we have learned from the observations. We developed a “reasoning framework” for metastability and metastable failures.

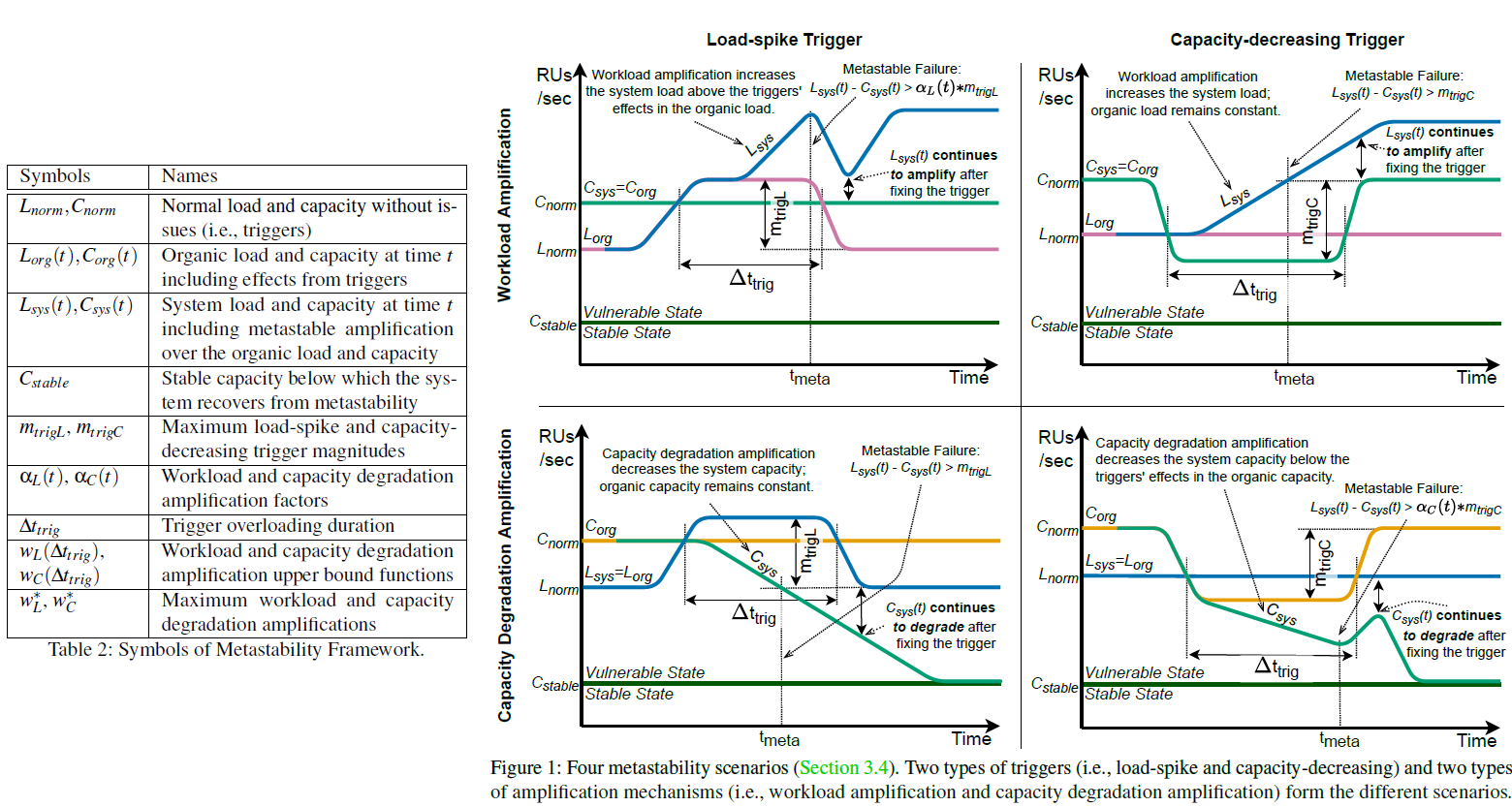

The two important characteristics of metastable failures are triggers and amplification effects. On the trigger side, we can have load-spiking triggers and capacity-decreasing triggers. The former trigger type temporarily increases the load on the system above some pre-trigger level. An example of this can be higher than expected demand from users. The latter trigger type degrades the system’s serving capacity. An example of this trigger is a server failure that takes some capacity out.

Similarly to triggers, there are two types of amplification effects: workload amplification and capacity degradation amplification. Both feedback loops kick in after a system enters an overloaded state, that is, when it has insufficient serving capacity for the current offered load. Workload amplification seems to be the more common type in the examples we found so far. It creates even more load in response to overload. The vicious retry loop from earlier is an example of workload amplification. The other type, capacity degradation amplification, reduces the serving capacity of the system in response to an overload. This can happen, for instance, in cached systems and applications, when losing some cache can result in a downstream system overload that either prevents cache rebuild or downright causes more cache to expire and go stale.

The figure above shows how these two types of triggers and two types of amplification mechanisms can combine. Also, an important point to note: it is possible to have a combination of more than one trigger and more than one amplification mechanism, something that we have seen in the wild. Whether a system actually experiences a metastable failure depends a lot on how the triggers and amplification mechanisms interact and how much load was offered to the system before the failure sequence began.

Small triggers that do not last long or do not have a big enough magnitude (i.e., a trigger that does not overload the system too much) may be less likely to cause an issue. On the other hand, a trigger that lasts an hour and takes out half of the servers is a much bigger problem. Of course, the magnitude of the trigger is not an absolute metric — your system may be fine even when you take out half the servers if it was operating at 25% of its maximum capacity before the trigger event took place.
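
The arithmetic behind that last point is simple enough to write down. The helper below is just an illustration (the function name is made up), and it assumes the load stays constant while the trigger removes a fraction of the capacity.

```python
def utilization_after_trigger(utilization_before, capacity_lost_fraction):
    """Utilization of the remaining capacity, assuming the load stays the same."""
    remaining = 1.0 - capacity_lost_fraction
    if remaining <= 0:
        return float("inf")  # no capacity left at all
    return utilization_before / remaining


print(utilization_after_trigger(0.25, 0.5))  # 0.5: plenty of slack left
print(utilization_after_trigger(0.60, 0.5))  # above 1.0: the system is overloaded
```

The same trigger, losing half the servers, is harmless at 25% utilization and pushes the system into overload at 60%, which is where the amplification mechanisms get a chance to kick in.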

Triggers are not metastable failures. They are failures on their own that can cause availability and performance issues for clients or users, but they are only the beginning of the metastable failure sequence. The amplification mechanisms are those feedback loops that start after the system is overloaded due to a trigger. Amplification leads to a metastable failure after the system’s overload is amplified so high that even fixing the trigger (i.e., returning lost capacity, or solving the load spike) does not solve the problem. The figure marks those metastable failure points. Naturally, the type of amplification matters a lot. A system with “slow” amplification loops that add little overload over time is better than systems with “fast” amplification loops. In both cases, we can have a metastable failure, but a slow amplification mechanism gives us more time to deal with the trigger.

Anyway, what are the lessons here? For one, I cannot stress enough the importance of understanding the mechanisms involved so that we can operate the systems better. Maybe it is not a good idea to squeeze every single bit of capacity from the system/hardware? Having some slack resources allows us to absorb smaller triggers and buys time before the system enters the metastable failure for bigger triggers. The same goes for making systems with predictable performance characteristics. If some code path is significantly slower than the other, a sudden switch to the slower code path can act as a trigger, since it is essentially a capacity degradation.

Load shedding is the solution to metastable failures. All recovery efforts included some load shedding mechanisms. After all, to bring the system back into operation, its load needs to decrease sufficiently to stop the amplification. But we do not need to leave load shedding only for recovery efforts after it is already too late. More aggressive load shedding done ahead of time or early after the trigger overloaded the system can save us from a bigger failure: it is better to lose some requests and piss off some clients/users than lose the entire system and impact everyone. Here I can also lump in load prioritization: prioritize the work that will raise the system’s goodput if possible.
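
A minimal sketch of shedding with prioritization could look like the following. It is only one possible policy, not something taken from the paper: the `admit` function, the `(priority, name)` tuples, and the convention that a lower number means higher priority are all assumptions made for this example.

```python
def admit(requests, capacity):
    """Split requests into (admitted, shed), keeping the highest-priority ones.

    Each request is a (priority, name) tuple; a lower number is more important.
    """
    # sorted() is stable, so requests with equal priority keep arrival order.
    ranked = sorted(requests, key=lambda r: r[0])
    return ranked[:capacity], ranked[capacity:]


incoming = [(2, "report"), (0, "checkout"), (1, "search"), (2, "thumbnail")]
admitted, shed = admit(incoming, capacity=2)
print(admitted)  # [(0, 'checkout'), (1, 'search')]
print(shed)      # [(2, 'report'), (2, 'thumbnail')]
```

The point is that the system decides what to drop before spending resources on it, instead of letting every request queue up and time out on its own.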

Another point is to account for the human factor — about half of the observed failures in the wild had some direct human involvement, such as rolling out bad configuration or buggy code. It is important to test and deploy configuration the same way as software. This is something that many systems still seem to neglect. Proper testing of new code is paramount, including stress testing. And, of course, do not deploy on Friday… we have seen a failure in which a weekend load/traffic reduction masked increased resource usage of a new version deployed on Friday. The system failed after the load returned on Monday.

Another interesting observation is the “fix-to-break” behavior. On a few occasions, engineers increased the severity of the amplification mechanism (feedback loop) after fixing an outage, leading to a more severe failure in the future. This pattern is a good illustration of why we need to understand metastability in order to avoid causing failures.

As always, the paper has way more details. We talk a lot about definitions and formalism of metastable states and failures. We have a handful of distinct reproductions with triggers and amplifications caused by retries, cache failures, and garbage collection. And finally, we include some real-world first-hand details on metastable failures at a large internet company.